Cognitive Modeling

- 6597 words

- Estimated Reading Time: 31 minutes

Thinking Thoughts#

Elisabeth, Dogmeat, Lieutenant Kim Kitsuragi… These are my friends in other worlds. The worlds in which I fight dictators, roam an irradiated wasteland, solve murders, and discover what type of man I really am.

They are the NPC companions available in my favorite games. While I shy away from multiplayer games, I enjoy games that have good AI companions. I don’t know what that says about me.

For me, one of the hooks of a good game are the NPCs. Do they seem real to me?

Do they seem like they are alive and living a life in the game world?

I enjoy the phase in adventure and and role playing games where you’ve discovered

all the quest giving NPCs but you haven’t exhausted their dialog options yet.

The first time I played Skyrim I was delighted when I discovered that all of the towns people kept a schedule. They weren’t at their shops 24/7. The fact that you can befriend or alienate NPCs with your actions is fun.

How do these NPCs work? Can I design a simulation model that enables creating agents like the ones in my favorite games? I started working on the Agent’s Playground because I wanted an engine that enables exploring these questions without all of the complexities of building out an entire game world.

This article details the progress I’ve made on building a cognitive model for autonomous agent simulations.

Computational Models#

Before diving into designing my own agent models I reviewed the history of autonomous agents and tried to establish a foundation of the various techniques for building such things.

Automata#

The concept of automata is where I started. Automatons are an old idea. Mechanical automatons that could do calculations and play games have existed for hundreds of years. In the computer realm, they were first formalized by Warren McCulloch and Walter Pittsin in their 1943 paper A Logical Calculus Immanent in Nervous Activity. In 1955-56 G.H. Mealy and E.F. Moore, generalized the theory to much more powerful machines in separate papers. They respectively developed the concept of Mealy and Moore machines.

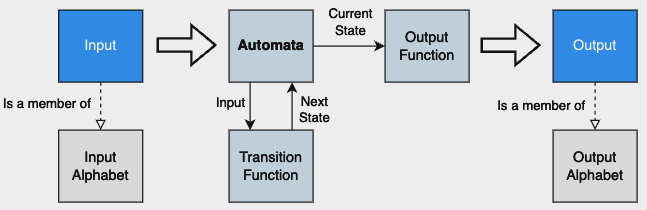

An automata is defined as:

Any thing or being regarded as having the power of spontaneous motion or action. –Huxley.

[1913 Webster]A self-moving machine, or one which has its motive power within itself; – applied chiefly to machines which appear to imitate spontaneously the motions of living beings, such as men, birds, etc.

[1913 Webster]

Automata is synonymous with automaton, which seems to roll of the tongue easier and fills my head with images of steam punk machines and old Tom Swift stories.

Automatons have been studied for years and are formalized in automata theory.

My understanding is that an automaton is composed of 6 properties.

| Property | Explanation |

|---|---|

| It has inputs. | It runs when given a sequence of inputs in a series of steps. Each input is from a set of symbols. The full set of possible inputs are called the input alphabet. During any step, the sequence of the input symbols are called words. |

| Has distinct states. | An automata can inhibit a set of states (e.g. awake, sleeping, hungry). An automata can only exist in a single state at a time. |

| It can transition between states. | When the automata is provided new input, it transitions to its next state. The mechanism that causes the transition is called a transition function. |

| It generates output. | When a transition occurs an output function produces symbols. The full set of symbols that can be output are called the output alphabet. |

| It is iterative. | The automation lives in a loop. It processes input symbols of the input word and transitions from state to state until all input is processed. |

| It can be stopped. | When an automaton stops, it is said to halt. Any state which causes the automaton to halt is called the final state. |

In code, an automaton can be defined as a 5 member tuple.

# If we have sets for the possible inputs, outputs, and states

input_alphabet = {}

output_alphabet = {}

possible_states = {}

# And we have functions for transitioning state and

# generating outputs.

def next_state(input):

"""The transition function."""

...

def next_output(current_state):

"""The output function."""

...

# Then we can clump it all together in a tuple and voilà,

# we've got an automaton.

automata = (

input_alphabet,

output_alphabet,

possible_states,

next_state,

next_output

)

So how is this useful? Automatons can be used to construct machines. For example, we can use the 5 member tuple definition of an automation to model a cell in Conway’s Game of Life as an automaton.

If you’re not familiar with Conway’s Game of Life, the rules are simple. A cell

is either “alive” (1) or “dead” (0). The state transition table below are the

rules for how a cell transitions between states.

| Current State | Input | Next State |

|---|---|---|

| 1 or 0 | -1 | Halt |

| 0 | Not 3 | 0 |

| 0 | 3 | 1 |

| 1 | 2 | 1 |

| 1 | 3 | 1 |

| 1 | < 2 | 0 |

| 1 | > 3 | 0 |

What does this look like if we use the automaton model to implement a cell in Conway’s Game?

from enum import Enum

import sys

from typing import (

Any,

Generic,

List,

NamedTuple,

Callable,

TypeVar

)

# First define our generic Automata tuple and related types.

AutomataState = TypeVar('AutomataState')

InputAlphabetMember = TypeVar('InputAlphabetMember')

OutputAlphabetMember = TypeVar('OutputAlphabetMember')

# Let's use a named tuple to demonstrate the definition of an automaton.

class Automata(NamedTuple,

Generic[AutomataState, InputAlphabetMember, OutputAlphabetMember]

):

input_alphabet: frozenset[InputAlphabetMember]

output_alphabet: frozenset[OutputAlphabetMember]

possible_states: frozenset[AutomataState]

next_state: Callable[[InputAlphabetMember, AutomataState, frozenset[InputAlphabetMember]], AutomataState]

next_output: Callable[[AutomataState, list[OutputAlphabetMember]], None]

# Define the possible states a Cell automaton can have.

class CellState(Enum):

Dead = 0

Alive = 1

# Define a function that is responsible for finding the

# next state of the automaton.

def find_next_state(

input_word: int,

current_state: CellState,

input_alphabet: frozenset[InputAlphabetMember]

) -> CellState:

if input_word not in input_alphabet:

msg = f'{input_word} is not in the input alphabet {list(input_alphabet)}'

print(msg)

return current_state

if input_word == -1:

print("Halting")

sys.exit()

next_state: CellState

if current_state == CellState.Alive:

next_state = CellState(int(input_word in {2,3}))

else:

next_state = CellState(int(input_word == 3))

return next_state

# Define a function that is responsible generating the

# automaton's output.

def print_next_output(

current_state: CellState,

output_alphabet: List

) -> None:

"""Print the correct output for the current state."""

print(f"Cell State: {output_alphabet[current_state.value]}")

# Finally, let's put it all together in a main loop.

def main():

valid_input = frozenset([0, 1, 2, 3, 4, 5, 6, 7, 8, -1])

possible_output = frozenset(['dead', 'alive'])

cell = Automata[CellState, int, str](

input_alphabet = valid_input,

output_alphabet = possible_output,

possible_states = frozenset(CellState),

next_state = find_next_state,

next_output = print_next_output

)

# Loop forever until the halt state is reached.

current_state: CellState = CellState.Dead

while True:

msg = f"Enter Something. Possible Options: {list(cell.input_alphabet)}\n"

input_word = input(msg)

try:

processed_input: int = int(input_word)

if processed_input in valid_input:

current_state = cell.next_state(

processed_input,

current_state,

cell.input_alphabet)

cell.next_output(current_state, list(cell.output_alphabet))

except ValueError:

print(f'{input_word} is not a valid input.')

if __name__ == '__main__':

main()

Limitations of this Approach

The above code works but demonstrates the limitations of basic automatons.

An automaton is quite dumb. It doesn’t have the ability to grow its input and

alphabets. It is completely deterministic. An automaton is never going to surprise

a user that knows the rules. It has no memory. The previous states cannot influence

the future states.

In some cases these limitations may be perfectly fine. A light switch for example doesn’t need to remember all the times it was on or off. If you put several of them together in a group you can create complex systems. Conway’s Game of Life demonstrates this with unpredictable emergent behaviors.

Types of State Management Solutions#

While automatons can be used to create simple machines they’re not a good fit for building NPCs in video games and simulations. Fortunately there are other computational models that extend the concept of automatons to be more powerful.

Here are a few examples listed in increasing computational power.

| Model | Details |

|---|---|

| FSA | Finite State Automata. This is what is demonstrated above. |

| FSM | Finite State Machines. FSMs are are class of FSAs. They come in a variety of flavors. The most famous are probably Mealy Machines and Moore Machines. |

| PDA | Push Down Automata are a class of FSMs that use a stack to enable more complex processing. |

| LBA | Linear Bounded Automata is a model that reads inputs from a fixed length tape and based on the input can perform a series of functions. LBAs can do more sophisticated work than PDAs or FSMs because they can rewind the tape. |

| Turing Machines | A Turing Machine is like an LBA but the input tape is theoretically infinite in length. |

FSMs and Turing Machines#

The various computational models listed in the above table all build on each other. For example, a Turing Machine is a Finite State Machine (FSM) but a FSM is not necessarily a Turing Machine.

FSMs in turn are an extension of FSAs. For a more examples on working with FSMs see Robert Nystrom’s chapter on the topic in his book Game Programming Patterns.

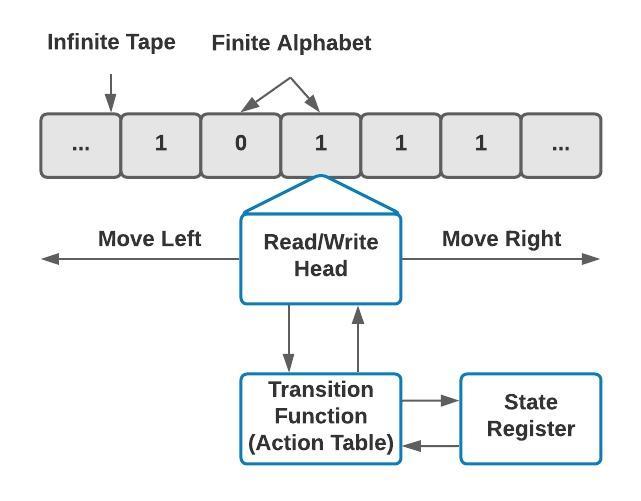

Of the various automaton classes, I find Turing Machines to be rather fascinating. A Turing machine is the most sophisticated class of automata. It is composed of multiple parts.

The Tape

The input of the Turing machine is traditionally modeled as a tape. The tape is an input stream that sequentially stores data. Conceptually, think of the tape as a paper ticker or a magnetic tape like in a cassette. It can be read in both directions. A key property of the tape is that it is considered to be infinite in length.

The tape is composed of fields, which are sequentially arranged. Each field in turn is composed of characters of a finite alphabet.

The Head

The tape is processed by a head moving in both directions over the tape. This head can read and write one character from the field over which the head resides.

State Register

All Turing machines are finite state machines. At any given point of time they are in a single state. Based on the input provided by the tape, the current state may change. The machine’s state is stored in the state register.

Transition Table

A Turing program is a list of transitions, which determine for the current state and the character that is being read by head:

- The next state

- A character which has to be written into the field under the head.

- A movement direction for the head – left, right or static.

Universal Turing Machine

Turing machines are powerful and can be used to simulate other machines. A Turing Machine that can simulate any other Turing Machine is called a Universal Turing Machine (UTM). This is also referred to as being Turing-complete.

Constructing a Turing Machine

We saw above that a basic automaton can be defined as a 5 part tuple.

Using the same convention, a Turing Machine can be defined as a 7 part tuple.

# Just like the automata, we define sets for the possible states,

# the tape alphabet, and the halting states.

possible_states = {}

tape_alphabet = {}

final_states = {}

# But, a Turing machine differs from the basic automaton with

# a few more concepts.

# The set of input symbols. These are a set of symbols allowed

# to appear in the initial tape contents.

input_symbols = {}

# The blank symbol. The only symbol allowed to occur on the tape

# infinitely often at any step during the computation

# Note: The blank_symbol is included in the tape_alphabet.

blank_symbol = ' '

# The starting state of the turing machine.

# Note: This is included in the possible_states set.

initial_state = None

# A function that specifies the next state to transited to,

# which symbol to overwrite the current symbol pointed by the

# head, and the next head movement.

def transition_function(current_state, input_symbol):

...

# Finally, we can collect these 7 components into a tuple to

# form a generic Turing Machine.

turing_machine = (

possible_states,

input_alphabet,

blank_symbol,

input_symbols,

initial_state,

final_states,

transition_function

)

Ok great… How is this useful? Turing machines are conceptual. The idea of an infinite “tape” separates them from the things we can build directly in reality. That said, we can use the model of a Turing machine to build all kinds of things.

If we replace the concept of a tape with memory and disk storage we can use the idea of a scanner with a state and transition table to build a lexer for example.

Can we construct NPCs from Turing Machines? The short answer is, sure. We can build anything with this model. That said, pragmatically a Turing Machine on it’s own isn’t a great fit for crafting NPCs.

So where does that leave me? On the spectrum of automatons FSAs are too simplistic and Turing Machines are too low level.

Ultimately, exploring the many, many different compute models led me to the conclusion that I want a very extensible Agent model. I want some agents to be dumb automatons and others to be able to leverage more sophisticated models such as PDAs, Behavior Trees, and HTN Planners.

With that perspective, I established my goals for the Agent model.

Establishing Design Goals#

The below table documents my top five design characteristics I want from my Agent model. I used these as a guide while evaluating design options.

| Priority | Design Characteristic | Explanation |

|---|---|---|

| 1 | Extensible | A simulation shall be able to replace any component of the agent model. |

| 2 | Emergence | Agents shall enable organizing complex behavior. |

| 3 | Object Permanence | Agents shall have mechanisms that enable remembering what they encounter. |

| 4 | Flexible Modeling | Agents should have an out of the box solution for driving both deterministic and non-deterministic behavior. |

| 5 | Configuration Driven | A simulation shall be able to control the parameters of the model via the scene file. |

Now that we’ve established the guiding design goals, let me tell you about how I tackled each one.

Agent Extensibility#

Extensibility in the Agent Playground is a bit more extreme than in a traditional app. Conceptually, there is the core engine which is used to run users’ simulations. A user in this context is a simulation developer. I want a user to be able to replace any aspect of the agent model without having to do much coding.

In an effort to support this I’m using two programming techniques.

- Contract enforcement via static duck typing.

- Runtime injection of dependencies.

Static Duck Typing#

Just like in my example of a basic finite state automaton and Turing Machine, I first started thinking about the agent model as an eight part tuple.

# An agent is the sum of its parts.

an_agent = (

agent_state # The internal state of the agent.

internal_systems # The subsystems that compose the agent.

identity # All of the agent's IDs used by the various engine components.

physicality # The agent's physical attributes.

position # All the attributes related to where the agent is spatially.

movement # Attributes used for movement.

style # Defines the agent's look.

memory # The memory store for the agent.

)

The Playground Engine should provide a default implementation for each of the eight components. But, each component should be replaceable in a simulation. Python protocols can help achieve this.

If you’re not familiar, protocols are similar to interfaces in Java and C# but with the caveats that:

- Implementing classes don’t need to inherit from the parent.

- Protocols can have method bodies.

Why protocols and not a base class or abstract class? Because I want to avoid inheritance based class hierarchies if I can. Type checkers will verify that instances have the same contract as the protocol but do not enforce that the instance be a subclass of the defining protocol call.

For example:

from typing import List, Protocol

# Define a few types for readability.

Color = str

Kilograms = float

# Define a duck's behavior contract.

class DuckLike(Protocol):

color: Color

weight: Kilograms

def quack(self):

print('Ducks say quack.')

def walk(self):

print('waddle waddle')

# Mallard implements the contract of DuckLike but doesn't

# inherit from DuckLike.

class Mallard:

def __init__(self, color: Color, weight: Kilograms):

self.color = color

self.weight = weight

def quack(self):

print('quack quack')

def walk(self):

print('Duck walking here.')

# Implement a duck but use the default method definitions.

class StiffTail(DuckLike):

def __init__(self, color: Color, weight: Kilograms) -> None:

super().__init__()

self.color = color

self.weight = weight

# Finally, let's put it all together in a main loop.

def main():

ducks: List[DuckLike] = [

Mallard('brown', 2.3),

StiffTail('red', 1.78)

]

for duck in ducks:

duck.quack()

duck.walk()

if __name__ == '__main__':

main()

If I run mypy against the above code everything checks out. From the static type checker’s perspective if it walks like a duck and talks like a duck then it must be a duck.

Naming things is always a challenge. For naming protocols I tend to use the convention of SomethingLike or SupportNameOfBehavior. This is from the perspective that protocols are a contract for a class’s behavior.

With this understanding of protocols, I define the contract for an Agent to be the following.

class AgentLike(FrameTick, Protocol):

"""Behaves like an autonomous agent."""

agent_state: AgentStateLike # The internal state of the agent.

internal_systems: AgentSystemLike # The subsystems that compose the agent.

identity: AgentIdentityLike # All of the agent's IDs.

physicality: AgentPhysicalityLike # The agent's physical attributes.

position: AgentPositionLike # All the attributes related to where the agent is.

movement: AgentMovementAttributes # Attributes used for movement.

style: AgentStyleLike # Defines the agent's look.

memory: AgentMemoryModel # The memory store for the agent.

# Excluding the method bodies for brevity...

def transition_state(self, other_agents: Dict[Tag, AgentLike]) -> None:

"""

Moves the agent forward one tick in the simulation.

"""

def tick(self) -> None:

"""

Required by FrameTick protocol.

Signifies the passing of a simulation frame.

"""

def agent_characteristics(self) -> AgentCharacteristics:

"""Bundles the agent characteristics."""

def reset(self) -> None:

"""Reset the agent state."""

def select(self) -> None:

"""Marks the agent as selected by the user or Simulation."""

def deselect(self) -> None:

"""Marks the agent as deselected by the user or Simulation."""

def face(self, direction: Vector, cell_size: Size) -> None:

"""Set the direction the agent is facing."""

def move_to(self, new_location: Coordinate, cell_size: Size) -> None:

"""Update the agent's location."""

def scale(self, amount: float) -> None:

"""

Applies a scaling factor to the agent's size along both axes.

"""

@property

def selected(self) -> bool:

"""Getter for the Agent's selected status."""

@property

def agent_scene_graph_changed(self) -> bool:

"""Indicates if the agent requires a scene graph update."""

@property

def agent_render_changed(self) -> bool:

"""Indicates if the agent requires a re-render."""

A simulation definition of an Agent can inherit from the AgentLike if the user wants to leverage the default implementation of the various methods but they don’t have to. They just need to maintain the contract defined by AgentLike.

Late Binding#

The second technique I use to help make the agent model extensible is delaying the binding of dependencies for the 8 agent components until runtime.

If a simulation doesn’t override it, the default behavior is that when an Agent is created an instance of the DefaultAgent is provisioned. I use constructor injection to pass all of the components in.

All 8 components of the agent model follow this pattern. Everything in the hierarchy of the agent model is a protocol with a default implementation that enables constructor injection.

# The default agent simply extends the AgentLike protocol and

# requires each component to be based in through the initialization

# function.

class DefaultAgent(AgentLike):

def __init__(

self,

initial_state: AgentStateLike,

style: AgentStyleLike,

identity: AgentIdentityLike,

physicality: AgentPhysicalityLike,

position: AgentPositionLike,

movement: AgentMovementAttributes,

agent_memory: AgentMemoryLike,

internal_systems: AgentSystemLike

) -> None:

self.agent_state = initial_state

self.style = style

self.identity = identity

self.physicality = physicality

self.position = position

self.movement = movement

self.memory = agent_memory

self.internal_systems = internal_systems

Agent Emergence#

A mental model that I use quite often is to approach organizations and IT systems with the technique of system thinking.

The basic idea is that complex system are the sum of their parts. They exhibit behavior that is not possible with the individual components.

People are complex systems composed of subsystems such as a skeleton, nervous system, lymphatic system, and so on.

As such, the AgentLike contract includes an internal_systems attribute. This is defined by the AgentSystemLike protocol which represents a hierarchy of systems that is scoped to the internal workings of an agent.

A system takes an agent’s characteristics as input, does work, and can produce one or more byproducts as outputs. These byproducts are stored locally in the system’s byproducts_store.

Byproducts can be passed up or down the hierarchy of systems. If they are passed up, they must be registered with the parent system.

The system implementer has the flexibility of adding custom logic before or after the system’s child processes are ran.

# Method bodies and private methods excluded for brevity.

class AgentSystemLike(Protocol):

"""Defines a hierarchy of systems."""

name: str # The unique name of the system.

subsystems: SimpleNamespace # Any subsystems this system has.

# The collection of byproducts this system produces.

byproducts_store: ByproductStore

# The list of byproducts this system can produce upwards.

byproducts_definitions: List[ByproductDefinition]

# The list of byproducts this system can produce that does

# not propagate up.

internal_byproducts_definitions: List[ByproductDefinition]

# Subsystems are registered. They are child nodes in the

# hierarchy of subsystems.

def register_system(

self,

subsystem: AgentSystem

) -> Self:

"""Add a subsystem."""

# The Process function is the heart of the AgentSystem

def process(

self,

characteristics: AgentCharacteristics,

agent_phase: AgentLifeCyclePhase,

other_agents: Dict[Tag, agent_spec.AgentLike],

parent_byproducts: dict[str, list] = {}

) -> None:

"""Orchestrates the processing of the system."""

# Do work in this system before invoking the subsystems.

self._before_subsystems_processed(

characteristics,

agent_phase,

parent_byproducts,

other_agents

)

# Invoke the subsystems.

self._process_subsystems(

characteristics,

agent_phase,

other_agents

)

# Do any work after the subsystems have been run.

self._after_subsystems_processed(

characteristics,

agent_phase,

parent_byproducts,

other_agents

)

# Collect any byproducts from the child subsystems.

self._collect_byproducts_from_subsystems()

def clear_byproducts(self) -> None:

"""

Clears the byproducts of the system.

It does NOT clear the subsystem's byproduct stores.

"""

Including the concept of a hierarchy of systems in the Agent model provides the ability to organize complex logic for agents.

The default functionality of how an Agent is intended to work is that once a frame each agent has its transition_state method called. This is responsible for figuring out what the agent’s next should be and performing any actions.

The default implementation is listed below. It shows how the state change relates to processing the agent’s internal systems.

class class AgentLike(FrameTick, Protocol):

def transition_state(

self,

other_agents: Dict[Tag, AgentLike]

) -> None:

"""

Moves the agent forward one tick in the simulation.

"""

characteristics = self.agent_characteristics()

self._before_state_change(characteristics)

self._pre_state_change_process_subsystems(characteristics, other_agents)

self._change_state(characteristics)

self._post_state_change_process_subsystems(characteristics, other_agents)

self._post_state_change(characteristics)

The out of the box life cycle for an Agent is very flexible. It provides hooks for a simulation author to define behavior on an Agent via the _before_state_change and _post_state_change methods or by using subsystems. If this life cycle isn’t a good fit for a simulation, the developer can always create their own transition_state definition.

Agent Object Permanence#

In the movie Memento, Leonard Shelby is a man on a mission. His wife has been murdered and he’s determined to track down her killers and enact his revenge. Just one problem. He can’t form new memories. Every few minutes he becomes disorientated and must determine where he’s at and what his next course of action is.

I like tinkering with finite state machines (FSMs). They’re easy for me to wrap my head around, simple to implemented, and straightforward to test. As great as a general FSM is, it is limited in the same way that poor Leonard is. It can’t remember what it has already processed.

There are different strategies for giving a FSM memory. The table below shows some of the

FSM variants and their storage solution.

| Expanded Finite State Machine | Storage Mechanism |

|---|---|

| Push Down Automata | FILO Stack |

| Stack Automata | Stack that is never popped. |

| Queue Automaton | FIFO Queue |

| Linear Bounded Automaton | Finite Tape |

| Turing Machines | Bi-Directional Infinite Tape |

What makes sense for a generic agent memory model?

I want the agent model to support a spectrum of simulation scenarios. Some agent’s may not have the capacity to remember anything while others may have a complex multi-tier memory model.

I’ll be honest. Trying to design a flexible memory model was an exercise in frustration. Ideally, the memory model should enable swapping out the model to enable any type of memory storage while at the same time:

- Provide static type checking with mypy.

- Avoid the need to use isinstance and issubclass.

- Avoid the need to use hasattr.

The challenge with this is different storage solutions require different structural contracts. Consider the difference between a Python list and a dict.

a_list = []

a_dict = {}

# Lists add items with append.

a_list.append(123)

# Dicts add items with __setitem__.

a_dict['a'] = 123

To have a generic contract that supports the various out of the box in memory storage types that Python provides is tricky. Not to mention the complexity of defining a contract that can accommodate custom storage types (i.e. DIY data structures).

Finally, there is the challenge of defining what a memory is. In the context of a simulation, a memory could be the output of an agent sensor (e.g. visual detection), it could be a skill an agent has learned. It could be the history of state changes.

The desire for flexibility drove the complexity of the abstract model. After trying a variety of approaches I settled on using a few techniques from functional programming. For an introduction to these techniques see my last article.

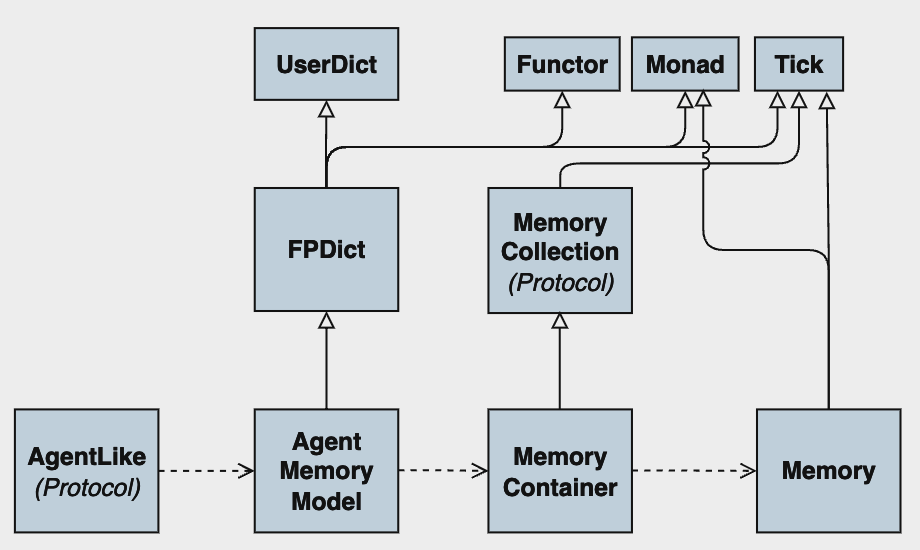

The image below shows the various classes that collectively define

an agent’s memory.

Memories#

The Memory class represents something that hangs around in the agent’s mind. To keep things generic, I’m using the Monad pattern to define a memory. Basically, that just means that Memory is a container that can wrap some other class and bind functions to it.

from typing import Generic, Hashable, TypeVar

from agents_playground.fp import Bindable, Monad

MemoryValue = TypeVar('MemoryValue')

MemoryMetadata = TypeVar('MemoryMetadata')

class Memory(Monad, Hashable, Generic[MemoryValue, MemoryMetadata]):

"""

Represents something an agent can retain in their brain.

This could be a sense, a fact, a skill, really anything.

"""

def __init__(

self,

core_memory: MemoryValue,

memory_metadata: MemoryMetadata | None = None

) -> None: ...

def wrap(

self,

core_memory: MemoryValue

) -> Memory[MemoryValue, Nothing]: ...

def unwrap(self) -> MemoryValue: ...

def metadata(self) -> Maybe[MemoryMetadata]: ...

def bind(

self,

next_func: Callable[[MemoryValue], Bindable[MemoryValue]]

) -> Bindable[MemoryValue]:

return next_func(self.unwrap())

Memory Container#

Memories are organized in memory containers. The MemoryContainer class is also a monad that wraps other things. In the case of the MemoryContainer it wraps generic storage classes.

Function programming patterns like monads are useful but they tend to spread across your codebase. To help keep things consistent I don’t allow the MemoryContainer to wrap just anything, rather I define a set of protocols that the MemoryContainer is allowed to wrap. These protocols mix the definitions of the Python collections.abc contracts with functional programming protocols.

from typing import TypeVar

import agents_playground.agents.memory.collections as memory_stores

from agents_playground.fp import Monad

# Bundle the various storage based protocols into a single type

# that the MemoryContainer can work with.

MemoryContainerStorage = TypeVar("MemoryContainerStorage",

memory_stores.Collection,

memory_stores.Sequence,

memory_stores.MutableSequence,

memory_stores.Set,

memory_stores.MutableSet,

memory_stores.Mapping,

memory_stores.MutableMapping,

memory_stores.SupportsTTL

)

# A MemoryContainer is a partition of agent Memories.

# The storage type is injected in the constructor.

class MemoryContainer(

memory_stores.Collection[MemoryContainerStorage],

Monad[MemoryContainerStorage]

):

def __init__(self, storage: MemoryContainerStorage) -> None:

self._storage: MemoryContainerStorage = storage

# Ignoring the other methods for brevity.

If you’re paying attention and are familiar with the Python standard library you may be thinking “Well gee Sam, why didn’t you just make the MemoryContainer work with the abstract classes defined in collections.abc?”.

The answer is I tried. Oh did I try…

I want to mix the contract definition of the storage classes with extra functions specific to my little app. I can’t just use the Python definitions because some of them are abstract classes. We can’t mix the chocolate of abstract classes with the peanut butter of protocols.

The work around is to create protocols that mimic the collection.abs abstract classes. Then we can mix them with other protocols.

The MemoryContainer class organizes instances of the Memory class. In the turn, the AgentMemoryModel organizes multiple instances of MemoryContainer.

from agents_playground.agents.memory.collections import SupportsMemoryMethods

from agents_playground.agents.memory.memory_container import MemoryContainer

from agents_playground.fp.containers import FPDict

class AgentMemoryModel(FPDict[str, MemoryContainer], SupportsMemoryMethods):

"""

Represents an agent's mind. What they're able to learn and remember.

Adheres to the AgentMemoryLike protocol contract.

"""

def __init__(self, dict = None, **kwargs) -> None: ...

def add(self, key: str, container: MemoryContainer) -> None:...

def tick(self) -> None:

"""

Notifies each memory container that the simulation has

advanced one frame.

"""

container: MemoryContainer

for container in self.data.values:

container.tick()

Agent Memory Model#

The AgentMemoryModel directly extends the FPDict class and implements the SupportsMemoryMethods protocol. “What’s the FPDict class?”, you ask? Well, I’ll tell you. I created functional versions of List, Dict, and Set. This enables having everything in the memory model behave in a consistent way.

The next question is that you may be wondering is about the tick method that is defined on the AgentMemoryModel class. All the classes in the memory model implement the Tick protocol. The Tick protocol is intended for classes that need to be notified that a frame in the simulation has passed. This is handy for implementing memory models that change over time, such has supporting learning and forgetfulness.

Now that we’ve got all the pieces defined it’s straightforward to build robust memory models that can change across simulations.

# A dumb agent that can't remember anything.

dumb_agent = DefaultAgent(memory = AgentMemoryModel())

# A sophisticated agent that has sensory memory, working memory,

# and long term memories.

complex_agent = DefaultAgent(

memory = AgentMemoryModel(

sense_memory = MemoryContainer(FPList[Memory]()),

working_memory = MemoryContainer(TTLStore[Memory]()),

long_term_memory = MemoryContainer(FPSet[Memory]()))

)

This works well enough that I can live with it for the time being. However, I’m pondering how to make this more extensible.

My design OCD is flared up because I’m using a concrete class for AgentMemoryModel. It is the only dependency that AgentLike has that isn’t a protocol.

This is one of those situations where I take a deep breath and remind myself that abstractions aren’t reality. They’re just a representation of some concept. I’m sure the agent memory model will continue to evolve as I build more complex simulations.

TTLStore#

In the last example a new class is used, the TTLStore. This is a custom container that ejects items when their time to live has expired. This enables creating memory models in which memories have some kind of event after a certain number of frames have passed in the simulation.

The Counter class is used to count down from an initially specified TTL value. When the TTL expires a bound function is run.

An optional custom action can be called for each item in the store on every frame tick.

from collections.abc import Collection

from math import inf

from typing import Any, Callable, Dict, TypeVar, cast

from agents_playground.agents.spec.tick import Tick

from agents_playground.counter.counter import Counter, CounterBuilder

def expire(store: Dict[Any, Counter], item: Any) -> None:

"""The function to call when the TTL is done."""

store.pop(item, None)

def do_nothing(**kwargs) -> None:

"""A pass through function used to simplify defaults."""

return

TTLStoreItem = TypeVar('TTLStoreItem')

INT_INFINITY: int = cast(int, inf)

class TTLStore(Tick, Collection[TTLStoreItem]):

"""

A container that automatically removes items with their time to live expires.

Items must be hashable.

"""

def __init__(self) -> None:

self._store: Dict[TTLStoreItem, Counter] = {}

def store(self,

item: TTLStoreItem,

ttl: int = INT_INFINITY,

tick_action: Callable = do_nothing) -> None:

"""

Stores an item with a TTL. If the item already exists,

then it's TTL countdown is reset to the new ttl.

Args:

- item: The item to store. This must be hashable.

- ttl: The number of ticks the item will be retained.

"""

if item in self._store:

self._store[item].start = ttl

self._store[item].reset()

else:

self._store[item] = CounterBuilder.integer_counter_with_defaults(

start=ttl,

min_value=0,

min_value_reached = expire,

decrement_action = tick_action

)

def tick(self) -> None:

"""Decrements the TTL of all the items in the store by 1."""

items = list(self._store.keys())

for item in items:

self._store[item].decrement(store = self._store, item = item)

def ttl(self, item: Any) -> int:

"""Returns the time to live for an item. Throws KeyError if the item doesn't exist."""

return cast(int, self._store[item].value())

Cognitive Processing#

The last aspect of the agent memory model is internal thinking. How can a simulation enable agents that have internal thoughts? The answer is to use systems.

We’ve seen already how AgentLike depends on AgentSystemLike to allow constructing agents with hierarchies of internal systems. When systems are processed the AgentMemoryModel is available for accessing or storing memories.

Flexible Modeling#

This blog post throws the word model around quite a bit. The fourth design goal for the Agent model is that it must enable flexible modeling. But what does that even mean?

Modeling in this context is about being able to whip up a simulation that has agents that exhibit some kind of behavior. Now as far as behavior goes there are two broad types, deterministic and non-deterministic.

The AgentLike protocol enables injecting an implementation of AgentStateLike which can be used for modeling both deterministic and non-deterministic behavior.

from typing import Protocol

from agents_playground.agents.spec.agent_action_selector_spec import AgentActionSelector

from agents_playground.agents.spec.agent_action_state_spec import AgentActionStateLike

from agents_playground.agents.spec.agent_characteristics import AgentCharacteristics

class AgentStateLike(Protocol):

# An agent can only exist in a single state.

current_action_state: AgentActionStateLike

last_action_state: AgentActionStateLike | None

# An action selector decides the next action to take (i.e. state to be in).

action_selector: AgentActionSelector

# States are transitioned between based on the provided

# agent characteristics (e.g. location, memories, mood, goals)

def transition_to_next_action(self, agent_characteristics: AgentCharacteristics) -> None:...

def assign_action_state(self, next_state: AgentActionStateLike) -> None:...

def reset(self) -> None:...

The benefit of AgentStateLike is twofold. First it provides a clear place to store all of the stateful aspects of an agent. Second, it enables injecting an AgentActionSelector.

The AgentActionSelector is responsible for encapsulating the logic of transitioning from one state to the next.

# The contract for AgentActionSelector is very simple.

# It defines the next_action method that given a state

# and agent characteristics determines the next Agent state.

class AgentActionSelector(Protocol):

def next_action(

self,

agent_characteristics: AgentCharacteristics,

current_action: AgentActionStateLike

) -> AgentActionStateLike: ...

Agent FSMs#

Deterministic finite state machines can be built with the MapAgentActionSelector class. The MapAgentActionSelector uses a dictionary to map states to their next state.

class MapAgentActionSelector(AgentActionSelector):

def __init__(

self,

state_map: dict[AgentActionStateLike, AgentActionStateLike]

) -> None:

self._state_map: dict[AgentActionStateLike, AgentActionStateLike] = state_map

def next_action(

self,

agent_characteristics: AgentCharacteristics,

current_action: AgentActionStateLike

) -> AgentActionStateLike:

return map_get_or_raise(

self._state_map,

current_action,

MapAgentActionSelectorException(

f'The Agent state map does not have a registered state named {current_action}'

)

)

Fuzzy Agents#

Deterministic agents are great for simple simulations or for defining basic machines. However, things get more interesting when there is a bit of randomness thrown into the mix.

The FuzzyAgentActionSelector class is the non-deterministic sibling to the MapAgentActionSelector class. Rather than using a simple transition map, the FuzzyAgentActionSelector maps states to instances of AgentActionStateRulesSet.

class FuzzyAgentActionSelector(AgentActionSelector):

def __init__(self, state_map: dict[AgentActionStateLike, AgentActionStateRulesSet] = {}) -> None:

self._state_map: dict[AgentActionStateLike, AgentActionStateRulesSet] = state_map

def next_action(

self,

characteristics: AgentCharacteristics,

current_action: AgentActionStateLike

) -> AgentActionStateLike:

rule: AgentActionStateRulesSet = map_get_or_raise(

self._state_map,

current_action,

Exception(f'No AgentActionStateRulesSet mapped to state f{current_action.name}.')

)

return rule.evaluate(characteristics)

The AgentActionStateRulesSet class provides flexibility in modeling state transition rules. Each state can have many possible transition rules defined. When evaluating the rule set it will evaluate them in the order they’re defined and will select the first one that has all of its criteria met.

Rule Use Cases

Transition rules have the following possible behavior.

- Cut and Dried: Transition rules are explicit. THIS state transitions to THAT state.

- Conditional: A rule has a condition that must evaluate to True for further consideration.

- Probabilistic: A rule has an associated probability (i.e. weighted coin flip) that must resolve for further consideration.

- Fuzzy: A rule can have multiple possible states associated with it. Each state has a probability. In this case both the condition and total rule possibility must be true.

With that many possible behaviors it would be easy to end up with a complicated solution. Fortunately, the desired outcome can be accomplished with a NamedTuple.

class AgentStateTransitionRule(NamedTuple):

"""

Responsible for encapsulating non-deterministic state

transition rules.

"""

state: AgentActionStateLike # The current state.

# The next state or possible next states.

transition_to: AgentActionStateLike | Tuple[AgentActionStateLike, ...]

# An optional condition that must evaluate to True for

# this rule to apply.

condition: Callable[[AgentCharacteristics], bool]

# A weighted coin that can be used to determine

# if the rule is applied.

likelihood: Coin

# Optional cumulative weights that determine the distribution

# to use if there are multiple possible next states.

choice_weights: Tuple[float, ...]

The below code snippet demonstrates how the transition rules are evaluated by the AgentActionStateRulesSet class.

from more_itertools import first_true

from random import choices

class AgentActionStateRulesSet(Protocol):

"""Responsible for evaluating the next state to transition to."""

rules: List[AgentStateTransitionRule]

no_rule_resolved: AgentStateTransitionRule

# The evaluate method find the the first rule that has all of

# its criteria met. Note that is uses the first_true function

# from the more_itertools package to simplify the code.

def evaluate(

self,

chars: AgentCharacteristics

) -> AgentActionStateLike:

"""Determine which rule to run."""

transition_rule: AgentStateTransitionRule = first_true(

self.rules,

default = self.no_rule_resolved,

pred = lambda rule: rule.condition(chars) and rule.likelihood.flip())

return self._process_transition(transition_rule, chars)

def _process_transition(

self,

transition_rule: AgentStateTransitionRule,

characteristics: AgentCharacteristics

) -> AgentActionStateLike:

if isinstance(transition_rule.transition_to, tuple):

# Find the next state using the distribution specified

# by the cumulative weights.

return choices(

population = transition_rule.transition_to,

cum_weights = transition_rule.choice_weights

)[0]

else:

return transition_rule.transition_to

Configuration Driven#

The last design goal for the Agent model is that is should be configuration driven. At the moment, this is the weakest part of the implementation.

I’ve written previously about how the Agent Playground leverages TOML as the scene definition language. TOML is good for quickly organizing lists or dictionaries of data but its not super expressive.

I know I want to replace TOML but I haven’t landed on what yet. To that end, I’ve invested in exposing the state transition rules in the Scene definition files but not the Agent memory model.

The below table indicates the current state of configuration

support for the Agent model. Memory and Style are the dimensions

that are currently not configurable.

| Model Component | Capability | Scene File Support |

|---|---|---|

| Agent State | Define Agent States | Yes |

| Agent State | Deterministic State Transition Rules | Yes |

| Agent State | Non-deterministic Transition Rules | Yes |

| Internal Systems | Assign Systems | Yes |

| Identity | ID based lookups | Yes |

| Identity | Dynamically create internal IDs. | Yes |

| Physicality | Specify Agent Size | Yes |

| Physicality | Specify View Frustum | Yes |

| Position | Specify Agent’s location. | Yes |

| Movement | Specify simulation movement attributes. | No |

| Style | Set stroke_thickness | No |

| Style | Set stroke_color | No |

| Style | Set fill color | Yes |

| Style | Set aabb_stroke_color | No |

| Style | Set aabb_stroke_thickness | No |

| Memory | Register memory containers | No |

| Memory | Pre-populate memories | No |

Items that are not exposed in the Scene definition file are controlled in simulations by writing custom Python code.

Wrapping Up#

And that, is my the current state of my Agent Model. This blog post represents a body of work that spanned several months due to only being able to spend a few hours a day on the effort.

Some resources that were really helpful while working on it are:

- The FSM focused papers on AI Wisdom.

- The Game AI Pro series of books.

- The chapter on state in the Game Programming Patterns book.

In the future I want to enhance the model to provide easy ways to assemble Behavior Trees, HTN Planners, and Markov Chains.

I’m especially interested in creating simulations where the agents develop emotions, have goals and perhaps leverage a utility system.

But those are all challenges for another day.

Until next time…

- Sam